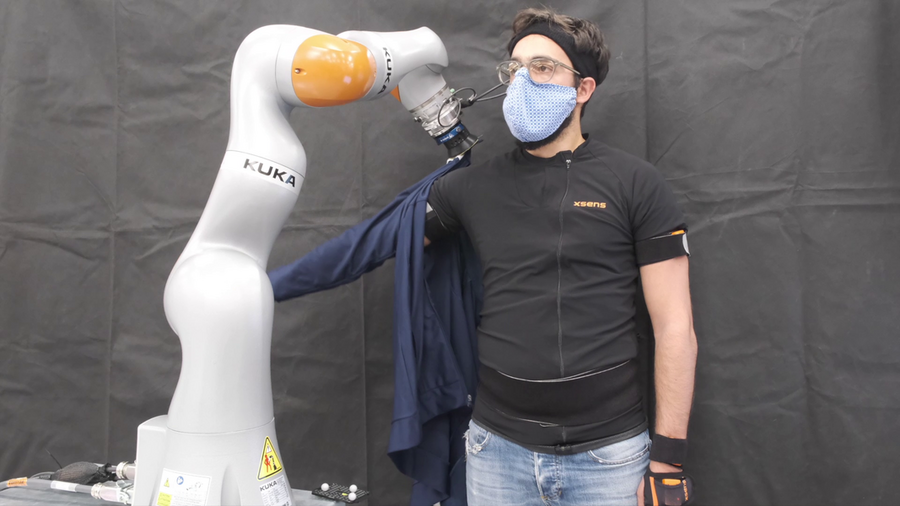

The robot seen here can’t see the human arm during the entire dressing process, yet it manages to successfully pull a jacket sleeve onto the arm. Image courtesy of MIT CSAIL.

By Steve Nadis | MIT CSAIL

Robots are already adept at certain things, such as lifting objects that are too heavy or cumbersome for people to manage. Another application they’re well suited for is the precision assembly of items like watches that have large numbers of tiny parts, some so small they can barely be seen with the naked eye.

“Much harder are tasks that require situational awareness, involving almost instantaneous adaptations to changing circumstances in the environment,” explains Theodoros Stouraitis, a visiting scientist in the Interactive Robotics Group at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL).

“Things become even more complicated when a robot has to interact with a human and work together to safely and successfully complete a task,” adds Shen Li, a PhD candidate in the MIT Department of Aeronautics and Astronautics.

Li and Stouraitis, along with Michael Gienger of the Honda Research Institute Europe, Professor Sethu Vijayakumar of the University of Edinburgh, and Professor Julie A. Shah of MIT, who directs the Interactive Robotics Group, have selected a problem that offers, quite literally, an armful of challenges: designing a robot that can help people get dressed. Last year, Li and Shah and two other MIT researchers completed a project involving robot-assisted dressing without sleeves. In a new work, described in a paper that appears in an April 2022 issue of IEEE Robotics and Automation, Li, Stouraitis, Gienger, Vijayakumar, and Shah explain the headway they’ve made on a more demanding problem: robot-assisted dressing with sleeved clothes.

The big difference in the latter case is due to “visual occlusion,” Li says. “The robot cannot see the human arm during the entire dressing process.” In particular, it cannot always see the elbow or determine its precise position or bearing. That, in turn, affects the amount of force the robot has to apply to pull the article of clothing, such as a long-sleeve shirt, from the hand to the shoulder.

To deal with obstructed vision in trying to dress a human, an algorithm takes a robot’s measurement of the force applied to a jacket sleeve as input and then estimates the elbow’s position. Image: MIT CSAIL

To deal with the issue of obstructed vision, the team has developed a “state estimation algorithm” that allows them to make reasonably precise educated guesses as to where, at any given moment, the elbow is and how the arm is inclined (whether it is extended straight out or bent at the elbow, pointing upwards, downwards, or sideways), even when it’s completely obscured by clothing. At each instant, the algorithm takes the robot’s measurement of the force applied to the cloth as input and then estimates the elbow’s position, not exactly, but by placing it within a box or volume that encompasses all possible positions.

That knowledge, in turn, tells the robot how to move, Stouraitis says. “If the arm is straight, then the robot will follow a straight line; if the arm is bent, the robot will have to curve around the elbow.” Getting a reliable picture is important, he adds. “If the elbow estimation is wrong, the robot could decide on a motion that would create an excessive, and unsafe, force.”

The algorithm includes a dynamic model that predicts how the arm will move in the future, and each prediction is corrected by a measurement of the force that’s being exerted on the cloth at a particular time. While other researchers have made state estimation predictions of this sort, what distinguishes this new work is that the MIT investigators and their partners can set a clear upper limit on the uncertainty and guarantee that the elbow will be somewhere within a prescribed box.
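To make the predict-then-correct idea with a guaranteed box more concrete, here is a minimal Python sketch. It is not the researchers’ algorithm: it is a generic interval (set-membership) estimator, and every name and number in it (`Box`, `predict`, `measurement_box`, `correct`, the speed bound, the error bound) is a hypothetical stand-in chosen for illustration. In particular, `measurement_box` assumes that some learned model has already turned the force reading into a rough elbow position with a known worst-case error.

```python
from dataclasses import dataclass
from typing import Tuple

Vec = Tuple[float, ...]


@dataclass(frozen=True)
class Box:
    """Axis-aligned box assumed to contain the true elbow position."""
    lo: Vec
    hi: Vec

    def contains(self, p: Vec) -> bool:
        return all(l <= x <= h for l, x, h in zip(self.lo, p, self.hi))


def predict(box: Box, max_speed: float, dt: float) -> Box:
    """Grow the box by the farthest the elbow could travel in dt.

    Assumption: the elbow never moves faster than max_speed along any axis.
    """
    r = max_speed * dt
    return Box(tuple(l - r for l in box.lo), tuple(h + r for h in box.hi))


def measurement_box(position_from_force: Vec, max_error: float) -> Box:
    """Box of elbow positions consistent with one force-derived estimate.

    Assumption: the (hypothetical) force-to-position model is never off
    by more than max_error along any axis.
    """
    return Box(
        tuple(x - max_error for x in position_from_force),
        tuple(x + max_error for x in position_from_force),
    )


def correct(predicted: Box, measured: Box) -> Box:
    """Intersect the two boxes; if both bounds hold, the truth lies in both."""
    lo = tuple(max(a, b) for a, b in zip(predicted.lo, measured.lo))
    hi = tuple(min(a, b) for a, b in zip(predicted.hi, measured.hi))
    if any(l > h for l, h in zip(lo, hi)):
        raise ValueError("empty intersection: an assumed bound was violated")
    return Box(lo, hi)


# One estimation step, in metres and seconds (illustrative values only).
box = Box((0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
box = predict(box, max_speed=0.5, dt=0.2)
box = correct(box, measurement_box((0.9, 0.5, 0.5), max_error=0.3))
print(box)
```

The guarantee in this sketch is only as good as its two assumed bounds: as long as the speed bound and the measurement error bound both hold, the true position cannot leave the box, which is the kind of hard upper limit on uncertainty the paragraph above describes.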

The model for predicting arm movements and elbow position and the model for measuring the force applied by the robot both incorporate machine learning techniques. The data used to train the machine learning systems were obtained from people wearing “Xsens” suits with built-in sensors that accurately track and record body movements. After the robot was trained, it was able to infer the elbow pose when putting a jacket on a human subject, a man who moved his arm in various ways during the procedure, sometimes in response to the robot’s tugging on the jacket and sometimes engaging in random motions of his own accord.

This work was strictly focused on estimation, that is, determining the location of the elbow and the arm pose as accurately as possible, but Shah’s team has already moved on to the next phase: developing a robot that can continually adjust its movements in response to shifts in the arm and elbow orientation.

In the future, they plan to address the issue of “personalization”: developing a robot that can account for the idiosyncratic ways in which different people move. In a similar vein, they envision robots versatile enough to work with a diverse range of cloth materials, each of which may respond somewhat differently to pulling.

Although the researchers in this group are definitely interested in robot-assisted dressing, they recognize the technology’s potential for far broader utility. “We didn’t specialize this algorithm in any way to make it work only for robot dressing,” Li notes. “Our algorithm solves the general state estimation problem and could therefore lend itself to many possible applications. The key to it all is having the ability to guess, or anticipate, the unobservable state.” Such an algorithm could, for instance, guide a robot to recognize the intentions of its human partner as it works collaboratively to move blocks around in an orderly manner or set a dinner table.

Here’s a conceivable scenario for the not-too-distant future: A robot could set the table for dinner and maybe even clear up the blocks your child left on the dining room floor, stacking them neatly in the corner of the room. It could then help you get your dinner jacket on to make yourself more presentable before the meal. It might even carry the platters to the table and serve appropriate portions to the diners. One thing the robot would not do would be to eat up all the food before you and others make it to the table. Fortunately, that’s one “app” (as in application rather than appetite) that is not on the drawing board.

This research was supported by the U.S. Office of Naval Research, the Alan Turing Institute, and the Honda Research Institute Europe.

MIT News