
LiDAR and visual cameras are two types of complementary sensors used for 3D object detection in autonomous vehicles and robots. LiDAR, a remote sensing technique that uses light in the form of a pulsed laser to measure ranges, provides low-resolution shape and depth information, while cameras provide high-resolution shape and texture information. Although the features captured by LiDAR and cameras should be merged to provide optimal 3D object detection, most state-of-the-art 3D object detectors use LiDAR as the only input. The main reason is that, to develop robust 3D object detection models, most methods need to augment and transform the data from both modalities, which makes accurate alignment of the features challenging.

Existing algorithms for fusing LiDAR and camera outputs, such as PointPainting, PointAugmenting, EPNet, 4D-Net and ContinuousFusion, generally follow one of two approaches: input-level fusion, where the features are fused at an early stage by decorating points in the LiDAR point cloud with the corresponding camera features, or mid-level fusion, where features are extracted from both sensors and then combined. Despite recognizing the importance of effective alignment, these methods struggle to efficiently process the common scenario where features are enhanced and aggregated before fusion. This indicates that effectively fusing the signals from both sensors is not straightforward and remains challenging.

In our CVPR 2022 paper, “DeepFusion: LiDAR-Camera Deep Fusion for Multi-Modal 3D Object Detection”, we introduce a fully end-to-end multi-modal 3D detection framework called DeepFusion that applies a simple yet effective deep-level feature fusion strategy to unify the signals from the two sensing modalities. Unlike conventional approaches that decorate raw LiDAR point clouds with manually selected camera features, our method fuses the deep camera and deep LiDAR features in an end-to-end framework. We begin by describing two novel techniques, InverseAug and LearnableAlign, that improve the quality of feature alignment and are applied to the development of DeepFusion. We then demonstrate state-of-the-art performance by DeepFusion on the Waymo Open Dataset, one of the largest datasets for automotive 3D object detection.

InverseAug: Accurate Alignment under Geometric Augmentation

To achieve good performance on existing 3D object detection benchmarks for autonomous vehicles, most methods require strong data augmentation during training to avoid overfitting. However, the necessity of data augmentation poses a non-trivial challenge in the DeepFusion pipeline. Specifically, the data from the two modalities use different augmentation strategies, e.g., rotating along the z-axis for 3D point clouds combined with random flipping for 2D camera images, which often results in inaccurate alignment. The augmented LiDAR data then has to go through a voxelization step that converts the point clouds into volume data stored in a three-dimensional array of voxels. The voxelized features are quite different from the raw data, making the alignment even more difficult. To address the alignment issue caused by geometry-related data augmentation, we introduce Inverse Augmentation (InverseAug), a technique used to reverse the augmentation before fusion during the model’s training phase.
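To make the voxelization step concrete, here is a minimal NumPy sketch that bins a point cloud into a dense 3D grid of per-voxel point counts. It is an illustration only, not the voxelizer used in DeepFusion: the function name `voxelize`, the grid origin, the voxel size and the use of point counts as the stored value are all assumptions made for this example.

```python
import numpy as np

def voxelize(points, voxel_size, grid_min, grid_shape):
    """Count the points that fall into each cell of a dense 3D voxel grid.

    points:     (N, 3) array of x, y, z coordinates
    voxel_size: edge length of a cubic voxel (hypothetical parameter)
    grid_min:   (3,) coordinate of the grid's minimum corner
    grid_shape: (nx, ny, nz) number of voxels along each axis
    """
    points = np.asarray(points, dtype=float)
    # Integer voxel index of every point along each axis.
    idx = np.floor((points - np.asarray(grid_min)) / voxel_size).astype(int)
    # Drop points that fall outside the grid.
    in_bounds = np.all((idx >= 0) & (idx < np.asarray(grid_shape)), axis=1)
    idx = idx[in_bounds]
    grid = np.zeros(grid_shape, dtype=np.int32)
    # np.add.at accumulates correctly when several points share a voxel.
    np.add.at(grid, (idx[:, 0], idx[:, 1], idx[:, 2]), 1)
    return grid
```

Because many points collapse into one cell, a voxel feature no longer corresponds to a single 3D coordinate, which is part of why aligning it with camera pixels is harder than aligning raw points.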

The figure below illustrates the difficulty of aligning the augmented LiDAR data with the camera data. In this case, the LiDAR point cloud is augmented by rotation, with the result that a given 3D key point, which can be any 3D coordinate such as a LiDAR data point, cannot be easily aligned in 2D space simply by using the original LiDAR and camera parameters. To make the localization feasible, InverseAug first stores the augmentation parameters before applying the geometry-related data augmentation. At the fusion stage, it reverses all data augmentation to recover the original coordinate of the 3D key point, and then finds its corresponding 2D coordinates in the camera space.
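The store-then-reverse idea can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the paper's implementation: the augmentation is limited to a z-axis rotation plus an optional flip of the y coordinate, the key points are assumed to already be expressed in the camera frame (so no LiDAR-to-camera extrinsics are applied), and the names `augment`, `inverse_augment` and `project_to_image` are hypothetical.

```python
import numpy as np

def rotation_z(angle):
    """3x3 rotation matrix about the z-axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def augment(points, angle, flip_y):
    """Rotate about z, then optionally flip y; also return the stored parameters."""
    out = points @ rotation_z(angle).T
    if flip_y:
        out = out * np.array([1.0, -1.0, 1.0])
    params = {"angle": angle, "flip_y": flip_y}  # saved for later inversion
    return out, params

def inverse_augment(points, params):
    """Undo the augmentation by applying the inverse steps in reverse order."""
    out = points.copy()
    if params["flip_y"]:
        out = out * np.array([1.0, -1.0, 1.0])      # a flip is its own inverse
    return out @ rotation_z(-params["angle"]).T     # rotate back

def project_to_image(points, intrinsics):
    """Pinhole projection; assumes points are in the camera frame, z forward."""
    uvw = points @ intrinsics.T
    return uvw[:, :2] / uvw[:, 2:3]

# Hypothetical key points and camera intrinsics, for illustration only.
key_points = np.array([[1.0, 2.0, 10.0], [-1.0, 0.5, 5.0]])
K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 40.0], [0.0, 0.0, 1.0]])

augmented, params = augment(key_points, angle=0.7, flip_y=True)
recovered = inverse_augment(augmented, params)
pixels = project_to_image(recovered, K)  # matches projecting the original points
```

Projecting `augmented` directly would land on the wrong pixels, whereas projecting `recovered` reproduces the pixel coordinates of the original, unaugmented key points.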

During training, InverseAug resolves the inaccurate alignment from geometric augmentation.

Left: Alignment without InverseAug. Right: Alignment quality improvement with InverseAug.

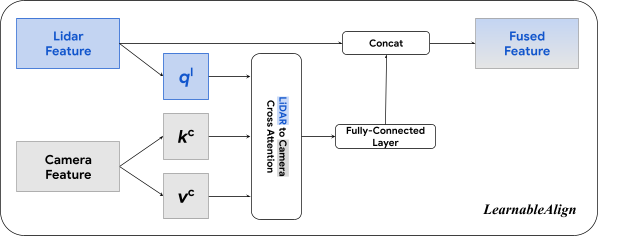

LearnableAlign: A Cross-Modality-Attention Module to Learn Alignment

We also introduce Learnable Alignment (LearnableAlign), a cross-modality-attention-based feature-level alignment technique, to improve the alignment quality. For input-level fusion methods, such as PointPainting and PointAugmenting, given a 3D LiDAR point, the single corresponding camera pixel can be located exactly because there is a one-to-one mapping. In contrast, when fusing deep features in the DeepFusion pipeline, each LiDAR feature represents a voxel containing a subset of points, and hence its corresponding camera pixels lie in a polygon. The alignment therefore becomes the problem of learning the mapping between a voxel cell and a set of pixels.

A naïve approach is to average over all pixels corresponding to the given voxel. However, intuitively, and as supported by our visualized results, these pixels are not equally important because the information from the LiDAR deep feature aligns unequally with each camera pixel. For example, some pixels may contain critical information for detection (e.g., the target object), while others may be less informative (e.g., consisting of backgrounds such as roads, plants, occluders, etc.).

LearnableAlign leverages a cross-modality attention mechanism to dynamically capture the correlations between the two modalities. Here, the input contains the LiDAR features in a voxel cell and all of its corresponding camera features. The output of the attention is essentially a weighted sum of the camera features, where the weights are collectively determined by a function of the LiDAR and camera features. More specifically, LearnableAlign uses three fully-connected layers to respectively transform the LiDAR features to a vector (q^l), and the camera features to vectors (k^c) and (v^c). For each vector (q^l), we compute the dot products between (q^l) and (k^c) to obtain the attention affinity matrix that contains correlations between the LiDAR features and the corresponding camera features. Normalized by a softmax operator, the attention affinity matrix is then used to calculate weights and aggregate the vectors (v^c) that contain camera information. The aggregated camera information is then processed by a fully-connected layer and concatenated (Concat) with the original LiDAR feature. The output is then fed into any standard 3D detection framework, such as PointPillars or CenterPoint, for model training.
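The attention computation described above can be sketched for a single voxel as follows. This is a minimal NumPy illustration rather than the paper's code: the fully-connected layers are reduced to plain weight matrices without biases, the weights are whatever the caller supplies (for example random, untrained values), no scaling is applied to the dot products because none is mentioned in the text, and the function and parameter names are assumptions.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over a 1D array."""
    e = np.exp(x - np.max(x))
    return e / e.sum()

def learnable_align(lidar_feat, camera_feats, w_q, w_k, w_v, w_out):
    """Fuse one voxel's LiDAR feature with its candidate camera features.

    lidar_feat:   (d_l,)    LiDAR feature of the voxel
    camera_feats: (n, d_c)  features of the n camera pixels matched to the voxel
    w_q, w_k, w_v, w_out:   weight matrices standing in for fully-connected layers
    """
    q = lidar_feat @ w_q               # query from the LiDAR feature, shape (d,)
    k = camera_feats @ w_k             # keys from the camera features, shape (n, d)
    v = camera_feats @ w_v             # values from the camera features, shape (n, d)
    weights = softmax(k @ q)           # one attention weight per pixel, shape (n,)
    aggregated = weights @ v           # weighted sum of the values, shape (d,)
    camera_out = aggregated @ w_out    # final fully-connected layer
    fused = np.concatenate([lidar_feat, camera_out])  # Concat with LiDAR feature
    return fused, weights
```

If every pixel produced the same key, the softmax would return uniform weights and the aggregation would reduce to the naïve average; learning the query and key projections is what lets the module weight informative pixels more heavily.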

LearnableAlign leverages the cross-attention mechanism to align LiDAR and camera features.

DeepFusion: A Better Way to Fuse Information from Different Modalities

Powered by our two novel feature alignment techniques, we develop DeepFusion, a fully end-to-end multi-modal 3D detection framework. In the DeepFusion pipeline, the LiDAR points are first fed into an existing feature extractor (e.g., the pillar feature net from PointPillars) to obtain LiDAR features (e.g., pseudo-images). In the meantime, the camera images are fed into a 2D image feature extractor (e.g., ResNet) to obtain camera features. Then, InverseAug and LearnableAlign are applied to fuse the camera and LiDAR features together. Finally, the fused features are processed by the remaining components of the selected 3D detection model (e.g., the backbone and detection head from PointPillars) to obtain the detection results.
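The data flow of the pipeline can be summarized as a short function that wires the stages together. This is only a structural sketch: every stage is passed in as a callable, and the function name, argument names and call signatures are hypothetical placeholders rather than an actual DeepFusion API.

```python
def deepfusion_forward(lidar_points, camera_images, aug_params, *,
                       lidar_extractor, image_extractor,
                       inverse_aug, learnable_align, detection_head):
    """Run one forward pass through the stages described above.

    All callables are supplied by the caller (hypothetical interfaces):
      lidar_extractor(points)              -> LiDAR features
      image_extractor(images)              -> camera features
      inverse_aug(lidar, camera, params)   -> camera features matched to each
                                              LiDAR feature after undoing the
                                              stored augmentation
      learnable_align(lidar, matched)      -> fused features
      detection_head(fused)                -> detection results
    """
    lidar_feats = lidar_extractor(lidar_points)
    camera_feats = image_extractor(camera_images)
    matched_camera_feats = inverse_aug(lidar_feats, camera_feats, aug_params)
    fused = learnable_align(lidar_feats, matched_camera_feats)
    return detection_head(fused)
```

Keeping the extractors and the detection head as interchangeable arguments mirrors the point made in the text that the fusion stage can be placed in front of an existing 3D detector.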

The pipeline of DeepFusion.

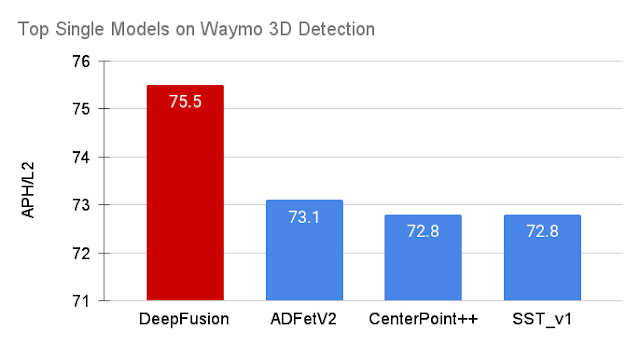

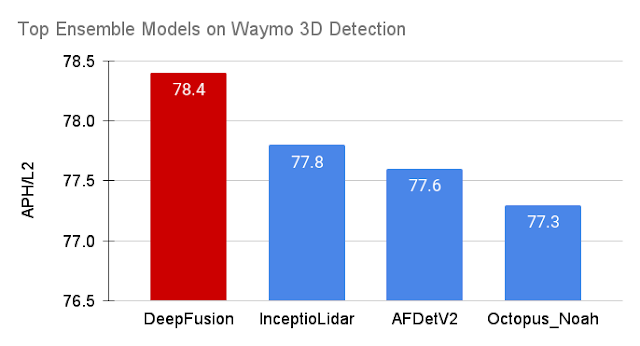

Benchmark Results

We evaluate DeepFusion on the Waymo Open Dataset, one of the largest 3D detection challenges for autonomous vehicles, using the Average Precision with Heading (APH) metric under difficulty level 2, the default metric for ranking a model’s performance on the leaderboard. Among the 70 participating teams from around the world, the DeepFusion single and ensemble models achieve state-of-the-art performance in their corresponding categories.

The single DeepFusion model achieves new state-of-the-art performance on Waymo Open Dataset.

The Ensemble DeepFusion model outperforms all other methods on Waymo Open Dataset, ranking No. 1 on the leaderboard.

The Impact of InverseAug and LearnableAlign

We also conduct ablation studies on the effectiveness of the proposed InverseAug and LearnableAlign techniques. We demonstrate that InverseAug and LearnableAlign each contribute a performance gain over the LiDAR-only model, and that combining both yields an even more significant boost.

Ablation studies on InverseAug (IA) and LearnableAlign (LA) measured in average precision (AP) and APH. Combining both techniques contributes to the best performance gain.

Conclusion

We demonstrate that late-stage deep feature fusion can be more effective when features are aligned well, but aligning features from two different modalities can be challenging. To address this challenge, we propose two techniques, InverseAug and LearnableAlign, to improve the quality of alignment among multimodal features. By integrating these techniques into the fusion stage of our proposed DeepFusion method, we achieve state-of-the-art performance on the Waymo Open Dataset.

Acknowledgements:

Special thanks to co-authors Tianjian Meng, Ben Caine, Jiquan Ngiam, Daiyi Peng, Junyang Shen, Bo Wu, Yifeng Lu, Denny Zhou, Quoc Le, Alan Yuille, Mingxing Tan.
