Machine learning (ML) has become prominent in information technology, which has led some to raise concerns about the associated rise in the costs of computation, primarily the carbon footprint, i.e., total greenhouse gas emissions. While these assertions rightfully elevated the discussion around carbon emissions in ML, they also highlight the need for accurate data to assess the true carbon footprint, which can help identify strategies to mitigate carbon emissions in ML.

In “The Carbon Footprint of Machine Learning Training Will Plateau, Then Shrink”, accepted for publication in IEEE Computer, we focus on operational carbon emissions (i.e., the energy cost of operating ML hardware, including data center overheads) from training natural language processing (NLP) models, and we investigate best practices that could reduce the carbon footprint. We demonstrate four key practices that reduce the carbon (and energy) footprint of ML workloads by large margins, which we have employed to help keep ML under 15% of Google’s total energy use.
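Operational emissions of this kind are commonly estimated as the energy drawn by the accelerators, scaled up by the data center's power usage effectiveness (PUE) to cover overheads, times the carbon intensity of the electricity supply. The Python sketch below shows that calculation under those assumptions; the function name and every input value are hypothetical illustrations, not figures or code from the paper.

```python
def operational_co2e_kg(
    accelerator_hours: float,
    avg_power_kw: float,
    pue: float,
    grid_kg_co2e_per_kwh: float,
) -> float:
    """Estimate the operational CO2e (kg) of one training run.

    Hypothetical helper: energy = accelerator-hours x average power per
    accelerator, scaled by PUE (>= 1.0) to include data center overheads
    such as cooling and power distribution; emissions = energy x carbon
    intensity of the electricity used.
    """
    if pue < 1.0:
        raise ValueError("PUE is facility energy / IT energy, so it is >= 1.0")
    it_energy_kwh = accelerator_hours * avg_power_kw
    facility_energy_kwh = it_energy_kwh * pue
    return facility_energy_kwh * grid_kg_co2e_per_kwh


# Hypothetical run: 1,000 accelerator-hours at 0.3 kW, PUE 1.1, 0.4 kg CO2e/kWh.
estimate = operational_co2e_kg(1_000, 0.3, 1.1, 0.4)
print(f"{estimate:.1f} kg CO2e")  # prints 132.0 kg CO2e
```

Roughly speaking, each input corresponds to one of the levers discussed below: more efficient models and hardware reduce accelerator-hours or power, efficient data centers reduce PUE, and cleaner locations reduce carbon intensity.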

The 4Ms: Best Practices to Reduce Energy and Carbon Footprints

We identified four best practices that reduce energy and carbon emissions significantly, which we call the “4Ms”. All of them are in use at Google today and are available to anyone using Google Cloud services.

Together, these four practices can reduce energy by 100x and emissions by 1000x.

Note that Google matches 100% of its operational energy use with renewable energy sources. Conventional carbon offsets are usually retrospective, purchased up to a year after the carbon emissions, and can be bought anywhere on the same continent. Google has committed to decarbonizing all energy consumption so that by 2030 it will operate on 100% carbon-free energy, 24 hours a day, on the same grid where the energy is consumed. Some Google data centers already operate on 90% carbon-free energy; the overall average was 61% carbon-free energy in 2019 and 67% in 2020.
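The difference between matching energy use with renewables in aggregate and running on carbon-free energy 24 hours a day can be made concrete with a toy calculation. The sketch below assumes a single grid and a hypothetical hour-by-hour series of consumption and carbon-free supply; it is a simplification for illustration, not Google's actual accounting methodology.

```python
from typing import Sequence


def annual_match_pct(use_mwh: Sequence[float], clean_mwh: Sequence[float]) -> float:
    """Aggregate matching: total clean energy procured vs. total use over the
    whole period, capped at 100%. When each MWh was produced is ignored."""
    total_use = sum(use_mwh)
    return 100.0 * min(sum(clean_mwh), total_use) / total_use


def hourly_cfe_pct(use_mwh: Sequence[float], clean_mwh: Sequence[float]) -> float:
    """Hourly (24/7) matching: clean energy counts only in the hour it is
    produced, so a surplus in one hour cannot cover a shortfall in another."""
    if len(use_mwh) != len(clean_mwh):
        raise ValueError("need one clean-supply value per hour of use")
    matched = sum(min(use, clean) for use, clean in zip(use_mwh, clean_mwh))
    return 100.0 * matched / sum(use_mwh)


# Hypothetical four-hour window: steady demand, clean supply only in hours 1-2.
use = [10.0, 10.0, 10.0, 10.0]
clean = [20.0, 20.0, 0.0, 0.0]
print(annual_match_pct(use, clean))  # 100.0
print(hourly_cfe_pct(use, clean))    # 50.0
```

Both functions see the same 40 MWh of clean supply, but under hourly matching only the half that coincides with demand counts. This is how a fleet can be 100% matched in aggregate while its hourly carbon-free share is considerably lower.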

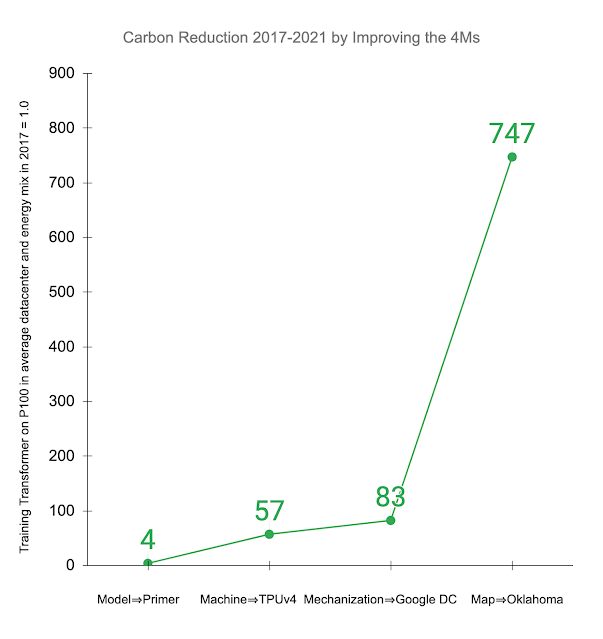

Below, we illustrate the impact of improving the 4Ms in practice. Other studies examined training the Transformer model on an Nvidia P100 GPU in an average data center with an energy mix consistent with the worldwide average. The recently introduced Primer model reduces the computation needed to achieve the same accuracy by 4x. Using newer-generation ML hardware, like TPUv4, provides an additional 14x improvement over the P100, or 57x overall. Efficient cloud data centers gain 1.4x over the average data center, resulting in a total energy reduction of 83x. In addition, using a data center with a low-carbon energy source can reduce the carbon footprint another 9x, resulting in a 747x total reduction in carbon footprint over four years.

Reduction in gross carbon dioxide equivalent emissions (CO2e) from applying the 4M best practices to the Transformer model trained on P100 GPUs in an average data center in 2017, as done in other studies. Displayed values are the cumulative improvement from successively addressing each of the 4Ms: updating the model to Primer; upgrading the ML accelerator to TPUv4; using a Google data center with better PUE than average; and training in a Google Oklahoma data center that uses very clean energy.
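Because each improvement multiplies the previous ones, the chain of reductions can be checked with a running product. The Python sketch below uses only the factors quoted in the text: it multiplies the rounded per-step factors and, in the other direction, recovers the per-step factor implied by each stated cumulative total. The small gaps that appear (for example, 4 × 14 = 56 versus the stated 57x, or an implied data center factor of about 1.46 rather than 1.4) are consistent with the per-step figures being rounded; that interpretation is our assumption, not something the text states.

```python
# Per-step factors as quoted in the text (rounded), in the order applied.
STEP_FACTORS = [
    ("model: Transformer -> Primer", 4.0),
    ("accelerator: P100 -> TPUv4", 14.0),
    ("data center: average -> efficient cloud", 1.4),
    ("energy: world-average mix -> low-carbon site", 9.0),
]
# Cumulative reductions as quoted in the text, after each step.
STATED_CUMULATIVE = [4.0, 57.0, 83.0, 747.0]


def cumulative_products(factors):
    """Running product: the total reduction after applying each step in turn."""
    totals, running = [], 1.0
    for factor in factors:
        running *= factor
        totals.append(running)
    return totals


def implied_step_factors(cumulative):
    """Invert the running product: the per-step factor each stated total implies."""
    return [cumulative[0]] + [
        later / earlier for earlier, later in zip(cumulative, cumulative[1:])
    ]


recomputed = cumulative_products(f for _, f in STEP_FACTORS)
implied = implied_step_factors(STATED_CUMULATIVE)
for (name, quoted), total, stated, step in zip(
    STEP_FACTORS, recomputed, STATED_CUMULATIVE, implied
):
    print(f"{name:45s} quoted x{quoted:<5g} implied x{step:<6.2f} "
          f"recomputed total x{total:<7.1f} stated total x{stated:g}")
```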

Overall Energy Consumption for ML

Google’s total energy usage increases annually, which is not surprising considering the increased use of its services. ML workloads have grown rapidly, as has the computation per training run, but paying attention to the 4Ms (optimized models, ML-specific hardware, efficient data centers) has largely compensated for this increased load. Our data shows that ML training and inference accounted for only 10%–15% of Google’s total energy use in each of the last three years, with each year's share split ⅗ for inference and ⅖ for training.
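The reported split turns the 10%–15% range into bounds for each workload. The short Python sketch below does that arithmetic with exact fractions, assuming the same ⅗/⅖ split applies at both ends of the range; by this calculation, inference accounts for roughly 6%–9% of Google's total energy use and training for roughly 4%–6%.

```python
from fractions import Fraction

# Split reported in the text: 3/5 of ML energy for inference, 2/5 for training.
INFERENCE_SHARE = Fraction(3, 5)
TRAINING_SHARE = Fraction(2, 5)


def split_ml_energy(ml_share_pct: Fraction) -> tuple[Fraction, Fraction]:
    """Return (inference, training) as percentages of total energy use."""
    return ml_share_pct * INFERENCE_SHARE, ml_share_pct * TRAINING_SHARE


for ml_pct in (10, 15):  # the reported 10%-15% range
    inference, training = split_ml_energy(Fraction(ml_pct))
    print(f"ML {ml_pct}% of total -> inference {float(inference):g}%, "
          f"training {float(training):g}%")
```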

Prior Emission Estimates

Google uses neural architecture search (NAS) to find better ML models. NAS is typically performed once per problem domain/search space combination, and the resulting model can then be reused for thousands of applications; for example, the Evolved Transformer model found by NAS is open sourced for all to use. Because the optimized model found by NAS is usually more efficient, the one-time cost of NAS is typically more than offset by emission reductions from subsequent use.
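The amortization argument can be framed as a break-even count: the one-time search pays for itself once the per-use savings of the discovered model, summed over its reuses, exceed the search's emissions. The sketch below assumes a constant saving per reuse; the function name and inputs are hypothetical, chosen only to show the shape of the calculation.

```python
import math


def nas_break_even_uses(search_kg_co2e: float, saving_per_use_kg_co2e: float) -> int:
    """Smallest number of reuses after which cumulative savings from the more
    efficient NAS-found model cover the one-time cost of the search.

    Hypothetical model: every reuse saves the same fixed amount of CO2e
    compared with the model it replaces.
    """
    if saving_per_use_kg_co2e <= 0:
        raise ValueError("without a per-use saving the search never pays back")
    return math.ceil(search_kg_co2e / saving_per_use_kg_co2e)


# Hypothetical inputs: a 1,000 kg search and 2.5 kg saved per reuse.
print(nas_break_even_uses(1_000, 2.5))  # 400
```

When a model is reused across thousands of applications, even a modest per-use saving clears such a threshold.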

A study from the University of Massachusetts (UMass) estimated carbon emissions for the Evolved Transformer NAS.

The overshoot for the NAS was 88x: 5x for energy-efficient hardware in Google data centers and 18.7x for computation using proxies. The actual CO2e for the one-time search was 3,223 kg versus 284,019 kg, 88x less than the published estimate.

Unfortunately, some subsequent papers misinterpreted the NAS estimate as the training cost for the model it discovered, yet emissions for this particular NAS are ~1300x larger than for training the model. These papers estimated that training the Evolved Transformer model takes two million GPU hours, costs millions of dollars, and produces carbon emissions equivalent to five times the lifetime emissions of a car. In reality, training the Evolved Transformer model on the task examined by the UMass researchers while following the 4M best practices takes 120 TPUv2 hours, costs $40, and emits only 2.4 kg (0.00004 car lifetimes), 120,000x less. This gap is nearly as large as if one overestimated the CO2e to manufacture a car by 100x and then used that number as the CO2e for driving a car.
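The ratios in this section can be recomputed from the absolute figures the text gives. The Python sketch below does so; the only derived quantity is the car-lifetime footprint obtained by dividing the published estimate by five, which is our inference from the text rather than a number it states.

```python
# Figures quoted in the text (kg CO2e).
PUBLISHED_NAS_ESTIMATE_KG = 284_019  # UMass estimate for the Evolved Transformer NAS
ACTUAL_NAS_KG = 3_223                # actual one-time search
TRAINING_RUN_KG = 2.4                # training the discovered model with the 4Ms
CAR_LIFETIMES_IN_ESTIMATE = 5        # "five times the lifetime emissions of a car"


def comparison_ratios() -> dict[str, float]:
    """Recompute the rounded ratios quoted in the text from its absolute figures."""
    car_lifetime_kg = PUBLISHED_NAS_ESTIMATE_KG / CAR_LIFETIMES_IN_ESTIMATE
    return {
        "published NAS estimate / actual NAS": PUBLISHED_NAS_ESTIMATE_KG / ACTUAL_NAS_KG,
        "actual NAS / training run": ACTUAL_NAS_KG / TRAINING_RUN_KG,
        "published NAS estimate / training run": PUBLISHED_NAS_ESTIMATE_KG / TRAINING_RUN_KG,
        "implied car lifetime (kg)": car_lifetime_kg,
        "training run in car lifetimes": TRAINING_RUN_KG / car_lifetime_kg,
    }


for label, value in comparison_ratios().items():
    print(f"{label:40s} {value:.6g}")
```

The unrounded results (about 88.1, 1,343, 118,000, and 4.2 × 10⁻⁵) round to the 88x, ~1300x, 120,000x, and 0.00004 car lifetimes quoted above.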

Outlook

Climate change is important, so we must get the numbers right to ensure that we focus on solving the biggest challenges. Within information technology, we believe these are much more likely to be the lifecycle costs of manufacturing computing equipment of all types and sizes¹ (i.e., emission estimates that include the embedded carbon emitted from manufacturing all components involved, from chips to data center buildings) rather than the operational cost of ML training.

Expect more good news if everyone improves the 4Ms. While these numbers may currently vary across companies, these simple measures can be adopted across the industry.

If the 4Ms become widely recognized, we predict a virtuous circle that will bend the curve so that the global carbon footprint of ML training is actually shrinking, not increasing.

Acknowledgements

I would like to thank my co-authors, who stayed with this long and winding investigation into a topic that was new to most of us: Jeff Dean, Joseph Gonzalez, Urs Hölzle, Quoc Le, Chen Liang, Lluis-Miquel Munguia, Daniel Rothchild, David So, and Maud Texier. We also had a great deal of help from others along the way for an earlier study that eventually led to this version of the paper. Emma Strubell made several suggestions for the prior paper, including the recommendation to examine the recent giant NLP models. Christopher Berner, Ilya Sutskever, OpenAI, and Microsoft shared information about GPT-3. Dmitry Lepikhin and Zongwei Zhou did a great deal of work to measure the performance and power of GPUs and TPUs in Google data centers. Hallie Cramer, Anna Escuer, Elke Michlmayr, Kelli Wright, and Nick Zakrasek helped with the data and policies for energy and CO2e emissions at Google.
