Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124


Research in the field of machine learning and AI, now a key technology in practically every industry and company, is far too voluminous for anyone to read it all. This column, Perceptron, aims to collect some of the most relevant recent discoveries and papers (particularly in, but not limited to, artificial intelligence) and explain why they matter.

In this batch of recent research, Meta open-sourced a language system that it claims is the first capable of translating 200 different languages with “state-of-the-art” results. Not to be outdone, Google detailed a machine learning model, Minerva, that can solve quantitative reasoning problems, including mathematical and scientific questions. And Microsoft released a language model, Godel, for generating “realistic” conversations that's along the lines of Google's widely publicized LaMDA. And then we have some new text-to-image generators with a twist.

Meta's new model, NLLB-200, is part of the company's No Language Left Behind initiative to develop machine-powered translation capabilities for most of the world's languages. Trained to understand languages such as Kamba (spoken by the Bantu ethnic group) and Lao (the official language of Laos), as well as 55 African languages not supported well or at all by previous translation systems, NLLB-200 will be used to translate languages on the Facebook News Feed and Instagram, in addition to the Wikimedia Foundation's Content Translation Tool, Meta recently announced.

AI translation has the potential to vastly scale (and already has scaled) the number of languages that can be translated without human expertise. But as some researchers have noted, errors spanning incorrect terminology, omissions, and mistranslations can crop up in AI-generated translations because the systems are trained largely on data from the internet, not all of which is high-quality. For example, Google Translate once presupposed that doctors were male and nurses were female, and Bing's translator rendered phrases like “the table is soft” with the feminine “die Tabelle” in German (which refers to a table of figures).

For NLLB-200, Meta said it “completely overhauled” its data cleaning pipeline with “major filtering steps” and toxicity-filtering lists for the full set of 200 languages. It remains to be seen how well it works in practice, but, as the Meta researchers behind NLLB-200 acknowledge in an academic paper describing their methods, no system is completely free of biases.
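Meta doesn't spell out its pipeline here, but the basic idea of list-based filtering is easy to illustrate. The following is a minimal sketch, not Meta's implementation: it assumes a hypothetical per-language word list (`TOXICITY_LISTS`, with placeholder entries) and simply drops any sentence pair in which either side contains a listed term.

```python
import re

# Hypothetical per-language lists of flagged terms (placeholders, not Meta's actual lists).
TOXICITY_LISTS: dict[str, set[str]] = {
    "eng": {"badword", "slur"},
    "fra": {"grosmot"},
}


def tokenize(text: str) -> list[str]:
    # Naive lowercase word tokenizer; assumes words are separated by spaces/punctuation.
    return re.findall(r"\w+", text.lower())


def is_flagged(text: str, lang: str) -> bool:
    # A language with no list is treated as having nothing to flag.
    terms = TOXICITY_LISTS.get(lang, set())
    return any(tok in terms for tok in tokenize(text))


def filter_pairs(
    pairs: list[tuple[str, str]], src_lang: str, tgt_lang: str
) -> list[tuple[str, str]]:
    """Keep only sentence pairs where neither side contains a listed term."""
    return [
        (src, tgt)
        for src, tgt in pairs
        if not is_flagged(src, src_lang) and not is_flagged(tgt, tgt_lang)
    ]


pairs = [("hello world", "bonjour le monde"), ("you badword", "toi grosmot")]
print(filter_pairs(pairs, "eng", "fra"))
```

A real pipeline would need language-aware tokenization (some languages, Lao among them, don't put spaces between words) and would chain a step like this with other filters.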

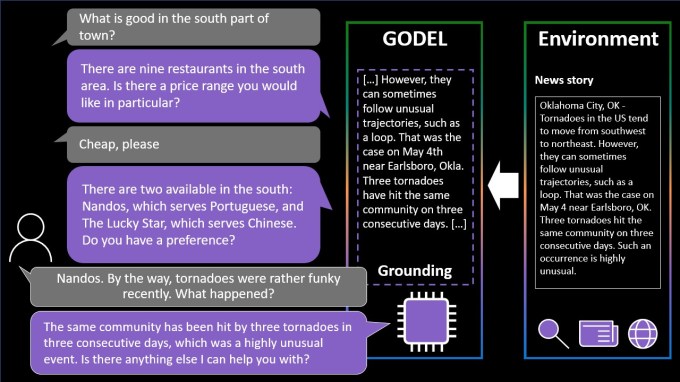

Godel, similarly, is a language model trained on a vast amount of text from the web. However, unlike NLLB-200, Godel was designed to handle “open” dialogue: conversations about a range of different topics.

Image Credits: Microsoft

Godel can answer a question about a restaurant or have a back-and-forth dialogue about a particular subject, such as a neighborhood's history or a recent sports game. Usefully, and like Google's LaMDA, the system can draw on content from around the web that wasn't part of the training data set, including restaurant reviews, Wikipedia articles, and other content on public websites.
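To make the idea of drawing on outside content concrete, here is a toy sketch of grounding a dialogue model: pick the external snippet that shares the most words with the latest user turn and place it alongside the conversation in the model's input. The word-overlap retriever, the function names, and the `Context:`/`Knowledge:` input layout are all hypothetical; Microsoft's actual input format and retrieval setup may differ.

```python
import re


def words(text: str) -> set[str]:
    # Lowercased word set used for a crude overlap score.
    return set(re.findall(r"\w+", text.lower()))


def pick_snippet(query: str, snippets: list[str]) -> str:
    # Hypothetical retriever: choose the snippet sharing the most words with the query.
    q = words(query)
    return max(snippets, key=lambda s: len(q & words(s)))


def build_grounded_input(dialog: list[str], snippets: list[str]) -> str:
    """Assemble a model input from the dialogue history plus one retrieved snippet."""
    knowledge = pick_snippet(dialog[-1], snippets)
    history = " | ".join(dialog)
    return f"Context: {history}\nKnowledge: {knowledge}\nResponse:"


snippets = [
    "Luigi's is open until 10pm on weekdays.",
    "The stadium opened in 1912.",
]
dialog = ["Hi there!", "When does Luigi's close tonight?"]
print(build_grounded_input(dialog, snippets))
```

The point of the design is that the snippet is supplied at answer time, so the model can use information that never appeared in its training data.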

But Godel encounters the same pitfalls as NLLB-200. In a paper, the team responsible for creating it notes that it “may generate harmful responses” owing to the “forms of social bias and other toxicity” in the data used to train it. Eliminating, or even mitigating, these biases remains an unsolved challenge in the field of AI, and it may never be completely solved.

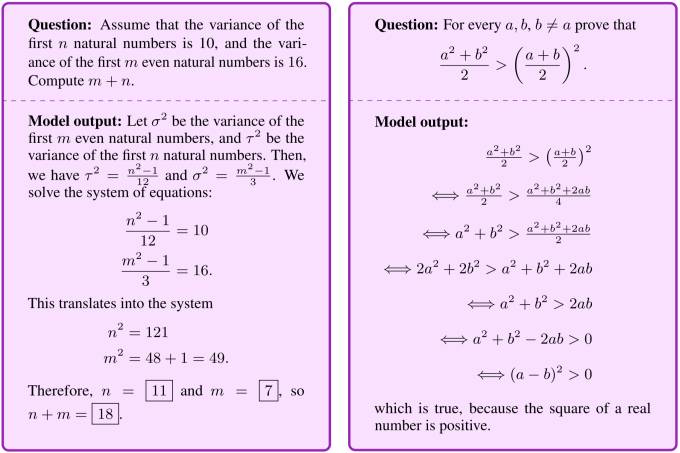

Google's Minerva model is less potentially problematic. As the team behind it describes in a blog post, the system learned from a data set of 118GB of scientific papers and web pages containing mathematical expressions to solve quantitative reasoning problems without using external tools like a calculator. Minerva can generate solutions that include numerical calculations and “symbolic manipulation,” achieving leading performance on popular STEM benchmarks.

Minerva isn't the first model developed to solve these kinds of problems. To name a few, Alphabet's DeepMind demonstrated several algorithms that can aid mathematicians in complex and abstract tasks, and OpenAI has experimented with a system trained to solve grade school-level math problems. But Minerva incorporates recent techniques to better solve mathematical questions, the team says, including an approach that involves “prompting” the model with several step-by-step solutions to existing questions before presenting it with a new question.
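The “prompting” approach the team describes amounts to placing a few worked, step-by-step solutions ahead of the new question so the model continues the pattern. Here is a minimal sketch of assembling such a prompt; the `Problem:`/`Solution:` layout and the `WorkedExample` type are assumptions for illustration, not Minerva's actual prompt format.

```python
from dataclasses import dataclass


@dataclass
class WorkedExample:
    question: str
    solution: str  # step-by-step reasoning ending in a final answer


def build_few_shot_prompt(examples: list[WorkedExample], new_question: str) -> str:
    """Concatenate worked examples, then the new question with an empty solution slot."""
    parts = [
        f"Problem:\n{ex.question}\n\nSolution:\n{ex.solution}\n" for ex in examples
    ]
    # The model is expected to continue the text after the final "Solution:".
    parts.append(f"Problem:\n{new_question}\n\nSolution:\n")
    return "\n".join(parts)


examples = [
    WorkedExample(
        "What is 12 * 15?",
        "12 * 15 = 12 * 10 + 12 * 5 = 120 + 60 = 180. The answer is 180.",
    ),
]
print(build_few_shot_prompt(examples, "What is 14 * 25?"))
```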

Image Credits: Google

Minerva still makes its fair share of mistakes, and sometimes it arrives at a correct final answer but with faulty reasoning. Still, the team hopes that it'll serve as a foundation for models that “help push the frontiers of science and education.”

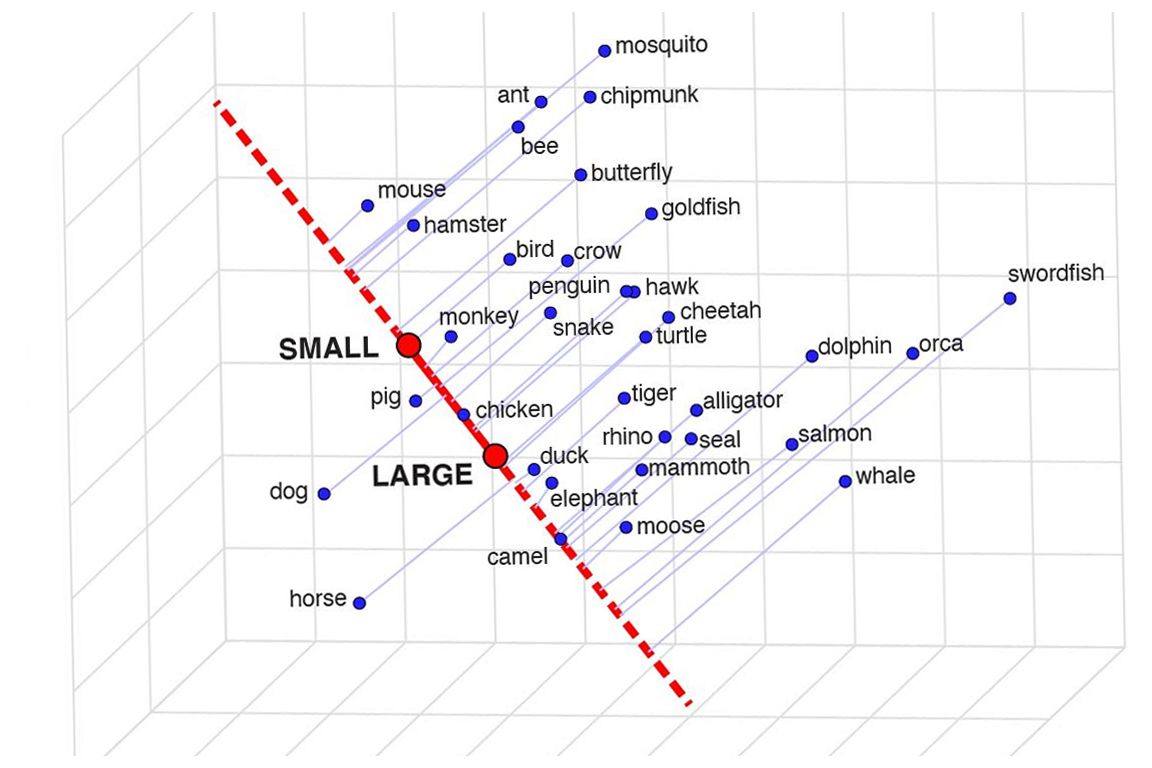

The question of what AI systems actually “know” is more philosophical than technical, but how they organize that knowledge is a fair and relevant question. For example, an object recognition system may show that it “understands” that housecats and tigers are similar in some ways by allowing the concepts to overlap purposefully in how it identifies them. Or maybe it doesn't really get it, and the two types of creatures are totally unrelated to it.

Researchers at UCLA wanted to see if language models “understood” words in that sense, and developed a method called “semantic projection” that suggests that yes, they do. While you can't simply ask the model to explain how and why a whale is different from a fish, you can see how closely it associates those words with other words, like mammal, large, scales, and so on. If whale associates highly with mammal and large but not with scales, you know it's got a decent idea of what it's talking about.

An example of where animals fall on the small-to-large spectrum as conceptualized by the model.

As a simple example, they found that animals lined up with the concepts of size, gender, danger, and wetness (the selection was a bit weird), while states lined up with weather, wealth, and partisanship. Animals are nonpartisan and states are genderless, so that all tracks.
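Semantic projection can be sketched in a few lines: average the vectors for words at each end of a scale (say, “small” and “tiny” versus “large” and “huge”), treat the difference as an axis, and measure where another word's vector falls along it. The three-dimensional vectors below are made up so the arithmetic is easy to follow; real embeddings have hundreds of dimensions, and this illustrates the idea rather than reproducing the UCLA team's code.

```python
# Toy 3-d word vectors, invented for illustration only.
EMBEDDINGS: dict[str, list[float]] = {
    "small": [1.0, 0.0, 0.2],
    "tiny": [0.9, 0.1, 0.1],
    "large": [0.0, 1.0, 0.2],
    "huge": [0.1, 0.9, 0.1],
    "mouse": [0.8, 0.2, 0.5],
    "dog": [0.5, 0.5, 0.5],
    "whale": [0.1, 0.95, 0.6],
}


def mean_vector(words: list[str]) -> list[float]:
    vecs = [EMBEDDINGS[w] for w in words]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def semantic_projection(word: str, low_pole: list[str], high_pole: list[str]) -> float:
    """Position of `word` on the low-to-high axis (about 0 at the low pole, 1 at the high pole)."""
    low = mean_vector(low_pole)
    high = mean_vector(high_pole)
    axis = [h - l for h, l in zip(high, low)]
    offset = [v - l for v, l in zip(EMBEDDINGS[word], low)]
    return dot(offset, axis) / dot(axis, axis)


animals = ["whale", "mouse", "dog"]
ranked = sorted(
    animals, key=lambda w: semantic_projection(w, ["small", "tiny"], ["large", "huge"])
)
print(ranked)  # ['mouse', 'dog', 'whale']
```

Swapping in other pole words (for example, “safe” versus “dangerous”) gives a different axis over the same vectors, which is how one set of embeddings can be probed for several features.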

There's no surer test right now of whether a model understands some words than asking it to draw them, and text-to-image models keep getting better. Google's “Pathways Autoregressive Text-to-Image” or Parti model looks to be one of the best yet, but it's difficult to compare it to the competition (DALL-E et al.) without access, which is something few of the models offer. You can read about the Parti approach in Google's write-up, at any rate.

One interesting aspect of the Google write-up is that it shows how the model performs with increasing numbers of parameters. See how the image improves gradually as the parameter count grows:

The prompt was “A portrait photo of a kangaroo wearing an orange hoodie and blue sunglasses standing on the grass in front of the Sydney Opera House holding a sign on the chest that says Welcome Friends!”

Does this mean the best models will all have tens of billions of parameters, meaning they'll take ages to train and run only on supercomputers? For now, sure. It's sort of a brute-force approach to improving things, but the “tick-tock” of AI means that the next step isn't just to make it bigger and better, but to make it smaller and equivalent. We'll see who manages to pull that off.
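A quick back-of-the-envelope calculation shows why parameter counts matter for hardware. This sketch assumes 16-bit weights (2 bytes per parameter) and counts only the weights themselves, ignoring activations and, for training, optimizer state; the model sizes are illustrative.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory needed just to hold the weights, in decimal gigabytes.

    Defaults to 2 bytes per parameter (16-bit floats); activations, optimizer
    state, and framework overhead are not counted.
    """
    return num_params * bytes_per_param / 1e9


# Illustrative sizes, from hundreds of millions to tens of billions of parameters.
for params in (350e6, 3e9, 20e9, 80e9):
    print(f"{params / 1e9:g}B parameters -> {weight_memory_gb(params):g} GB of 16-bit weights")
```

At 20 billion parameters the weights alone come to 40 GB, more than the memory on a typical consumer graphics card, before counting the extra memory that training requires.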

Not one to be left out of the fun, Meta also showed off a generative AI model this week, though one that it claims gives more agency to the artists using it. Having played with these generators a lot myself, I can say part of the fun is seeing what they come up with, but they frequently produce nonsensical layouts or don't “get” the prompt. Meta's Make-A-Scene aims to fix that.

Animation of different images generated from the same text and sketch prompt.

It's not quite an original idea: you paint in a basic silhouette of what you're talking about, and the model uses that as a foundation on which to generate an image. We saw something like this in 2020 with Google's nightmare generator. This is a similar concept, but scaled up to create realistic images from text prompts, using the sketch as a basis but with lots of room for interpretation. It could be useful for artists who have a general idea of what they're thinking of but want to embrace the model's unbounded and weird creativity.

Like most of these systems, Make-A-Scene isn't actually available for public use, since, like the others, it's pretty greedy computation-wise. Don't worry, we'll get decent versions of these things at home soon.