Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Data scientists have used the DataRobot AI Cloud platform to build time series models for several years. Recently, new forecasting features and an improved integration with Google BigQuery have enabled data scientists to build models with greater speed, accuracy, and confidence. This alignment between DataRobot and Google BigQuery helps organizations uncover impactful business insights more quickly.

Forecasting is an important part of making decisions every single day. Workers estimate how long it will take to get to and from work, then arrange their day around that forecast. People consume weather forecasts and decide whether to grab an umbrella or skip that hike. On a personal level, you're generating and consuming forecasts every day in order to make better decisions.

It's the same for organizations. Forecasting demand, turnover, and cash flow is critical to keeping the lights on. The easier it is to build a reliable forecast, the better your organization's chances of succeeding. However, tedious and redundant tasks in exploratory data analysis, model development, and model deployment can stretch the time to value of your machine learning projects. Real-world complexity, scale, and siloed processes among teams can also add challenges to your forecasting.

The DataRobot platform continues to enhance its differentiating time series modeling capabilities. It takes something that's hard to do but important to get right (forecasting) and supercharges data scientists. With automated feature engineering, automated model development, and more explainable forecasts, data scientists can build more models with more accuracy, speed, and confidence.

When used together with Google BigQuery, DataRobot takes an impressive set of tools and scales them to handle some of the biggest problems facing businesses and organizations today. Earlier this month, DataRobot AI Cloud achieved the Google Cloud Ready – BigQuery Designation from Google Cloud. This designation gives our mutual customers an additional level of confidence that DataRobot AI Cloud works seamlessly with BigQuery to generate even more intelligent business solutions.

To understand how DataRobot AI Cloud and BigQuery can align, let's explore how DataRobot AI Cloud Time Series capabilities help enterprises with three specific areas: segmented modeling, clustering, and explainability.

Forecasting the future is difficult. Ask anyone who has tried to "game the stock market" or "buy crypto at the right time." Even meteorologists struggle to forecast the weather accurately. That's not because people aren't intelligent. It's because forecasting is extremely challenging.

As data scientists might put it, adding a time component to any data science problem makes things significantly harder. But this is important to get right: your organization needs to forecast revenue to make decisions about how many employees it can hire. Hospitals need to forecast occupancy to know whether they have enough room for patients. Manufacturers have a vested interest in forecasting demand so they can fulfill orders.

Getting forecasts right matters. That's why DataRobot has invested years building time series capabilities like calendar functionality and automated feature derivation that empower its users to build forecasts quickly and confidently. By integrating with Google BigQuery, these time series capabilities can be fueled by massive datasets.

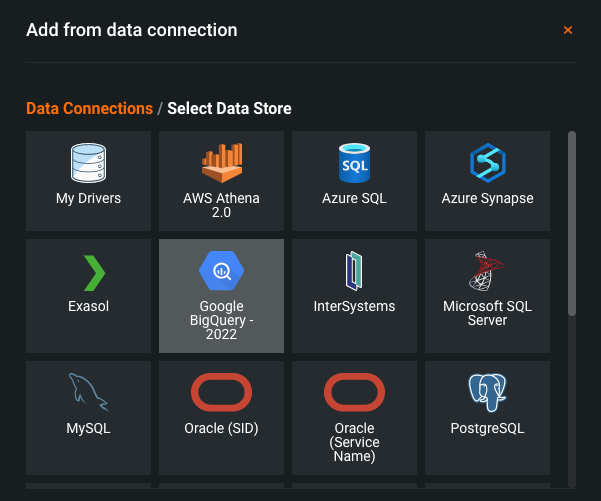

There are two options for integrating Google BigQuery data with the DataRobot platform. Data scientists can use their SQL skills to join their own datasets with publicly available Google BigQuery data. Less technical users can use the DataRobot Google BigQuery integration to select data stored in Google BigQuery and kick off forecasting models with little effort.
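For the SQL route, a query along these lines could join an in-house sales table to a public weather table before the data reaches DataRobot. This is a minimal sketch, assuming the `google-cloud-bigquery` Python client and Application Default Credentials. The sales table `my-project.retail.daily_sales`, its columns, and the `station_id` column linking each store to a weather station are hypothetical; the public NOAA GSOD table and its `stn`, `date`, and `temp` columns should be checked against the BigQuery console before use.

```python
"""Build (and optionally run) a BigQuery join of sales data with public weather data."""


def build_join_query(sales_table: str, weather_table: str) -> str:
    """Return Standard SQL joining daily store sales to same-day weather readings.

    Hypothetical schema: sales_table has store_id, station_id, sale_date, revenue.
    Assumed public schema: weather_table has stn, date, temp (mean temperature, F).
    """
    # LEFT JOIN keeps every sales row even if a station has no reading that day.
    return f"""
SELECT
  s.store_id,
  s.sale_date,
  s.revenue,
  w.temp AS mean_temp_f
FROM `{sales_table}` AS s
LEFT JOIN `{weather_table}` AS w
  ON w.stn = s.station_id
  AND w.date = s.sale_date
ORDER BY s.store_id, s.sale_date
"""


def run_query(sql: str):
    """Execute the query; needs GCP credentials plus pandas and db-dtypes installed."""
    from google.cloud import bigquery  # imported lazily so building SQL needs no GCP setup

    client = bigquery.Client()  # picks up Application Default Credentials
    return client.query(sql).to_dataframe()


if __name__ == "__main__":
    print(build_join_query(
        "my-project.retail.daily_sales",            # hypothetical table
        "bigquery-public-data.noaa_gsod.gsod2022",  # public NOAA GSOD table (verify schema)
    ))
```

The resulting table could then be selected through the DataRobot BigQuery integration like any other BigQuery table.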

When data scientists are introduced to forecasting, they learn terms like "trend" and "seasonality." They fit linear models or learn about the ARIMA model as a "gold standard." Even today, these are powerful pieces of many forecasting models. But in our fast-paced world, where models must adapt quickly, data scientists and their stakeholders need more: more feature engineering, more data, and more models.
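As a point of reference for the classic approach, the sketch below fits a linear trend plus day-of-week seasonal dummies by ordinary least squares with NumPy and extends both into the future. It is a deliberately simple baseline (not ARIMA, and not what DataRobot does internally); the weekly period of 7 and the synthetic series are assumptions for illustration.

```python
import numpy as np


def design_matrix(t: np.ndarray, period: int) -> np.ndarray:
    """Columns: intercept, linear trend, and (period - 1) seasonal dummies."""
    cols = [np.ones_like(t, dtype=float), t.astype(float)]
    for k in range(1, period):  # season 0 is absorbed into the intercept
        cols.append((t % period == k).astype(float))
    return np.column_stack(cols)


def fit_trend_seasonal(y: np.ndarray, period: int = 7) -> np.ndarray:
    """Least-squares coefficients for trend + seasonality on t = 0..len(y)-1."""
    t = np.arange(len(y))
    coef, *_ = np.linalg.lstsq(design_matrix(t, period), y, rcond=None)
    return coef


def forecast(coef: np.ndarray, start: int, horizon: int, period: int = 7) -> np.ndarray:
    """Extend the fitted trend and seasonal pattern to t = start..start+horizon-1."""
    t = np.arange(start, start + horizon)
    return design_matrix(t, period) @ coef


if __name__ == "__main__":
    weekly = np.array([0, 1, 2, 3, 4, 8, 6], dtype=float)  # hypothetical weekly pattern
    t = np.arange(70)
    y = 100 + 0.5 * t + weekly[t % 7]
    print(np.round(forecast(fit_trend_seasonal(y), 70, 7), 2))
```

A baseline like this assumes the trend and the weekly pattern stay fixed, which is exactly the assumption that breaks down when conditions shift quickly.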

For example, retailers across the U.S. recognize the importance of inflation to the bottom line. They also understand that the impact of inflation will probably vary from store to store. That is, if you have a store in Baltimore and a store in Columbus, inflation might affect your Baltimore store's bottom line differently than your Columbus store's bottom line.

If the retailer has dozens of stores, data scientists won't have weeks to build a separate revenue forecast for each store and still deliver timely insights to the business. Gathering the data, cleaning it, splitting it, building models, and evaluating them for each store is time-consuming. It's also a manual process, which increases the chance of making a mistake. And that doesn't include the challenges of deploying multiple models, generating predictions, taking actions based on those predictions, and monitoring models to make sure they're still accurate enough to rely on as situations change.

The DataRobot platform's segmented modeling feature gives data scientists the ability to build multiple forecasting models simultaneously. It takes the redundant, time-consuming work of creating a model for each store, SKU, or category and reduces it to a handful of clicks. Segmented modeling in DataRobot empowers our data scientists to build, evaluate, and compare many more models than they could manually.

With segmented modeling, DataRobot creates multiple projects "under the hood." Each model is specific to its own data: your Columbus store forecast is built on Columbus-specific data, and your Baltimore store forecast is built on Baltimore-specific data. Your retail team benefits from forecasts tailored to the outcome you want to forecast, rather than assuming that the effect of inflation will be the same across all of your stores.

The benefits of segmented modeling go beyond the model-building process itself. When you bring your data in, whether through Google BigQuery or your on-premises database, the DataRobot platform's time series capabilities include advanced automated feature engineering. This applies to segmented models, too. The retail models for Columbus and Baltimore will have features engineered specifically from Columbus-specific and Baltimore-specific data. If you're working with even a handful of stores, doing this feature engineering by hand can be time-consuming.
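Per-segment feature engineering matters because derived features such as lags and rolling means must look only at the same segment's own history. Here is a standard-library sketch of that idea; the row layout `(segment, day_index, sales)`, the one-row-per-consecutive-day assumption, and the feature names are all assumptions, and DataRobot's automated feature derivation creates a much richer set of features than this.

```python
from collections import defaultdict


def add_lag_features(rows, lags=(1, 7), window=3):
    """rows: iterable of (segment, day_index, sales), one row per consecutive day.

    Returns one dict per row with lag and trailing rolling-mean features computed
    only from earlier rows of the same segment (None when history is too short).
    """
    history = defaultdict(list)  # segment -> sales values seen so far, in day order
    out = []
    for segment, day, sales in sorted(rows, key=lambda r: (r[0], r[1])):
        past = history[segment]
        feats = {"segment": segment, "day": day, "sales": sales}
        for k in lags:
            feats[f"lag_{k}"] = past[-k] if len(past) >= k else None
        feats[f"rolling_mean_{window}"] = (
            sum(past[-window:]) / window if len(past) >= window else None
        )
        out.append(feats)
        past.append(sales)  # update history only after computing features (no leakage)
    return out


if __name__ == "__main__":
    demo = [("Columbus", 0, 10.0), ("Columbus", 1, 12.0), ("Baltimore", 0, 30.0)]
    for row in add_lag_features(demo, lags=(1,), window=2):
        print(row)
```

Because history is keyed by segment, the first Baltimore row gets no lag value even though Columbus rows came before it.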

The time-saving benefits of segmented modeling also extend to deployments. Rather than deploying each model individually, you can deploy all of the models at once in a couple of clicks. This helps scale the impact of each data scientist's time and shortens the time it takes to get models into production.

As we've described segmented modeling so far, users define their own segments, or groups of series, to model together. If you have 50,000 different SKUs, you can build a distinct forecast for each SKU. You can also manually group certain SKUs into segments based on their retail category, then build one forecast for each segment.
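Human-defined segments usually come down to a lookup from each series to a segment label. A minimal sketch, assuming rows keyed by a `sku` field and a hypothetical analyst-maintained `SKU_TO_SEGMENT` mapping, could tag each row with a segment ID column and list which series end up modeled together:

```python
# Hypothetical analyst-maintained mapping from SKU to segment.
SKU_TO_SEGMENT = {"SWIM-001": "clothing", "TEE-002": "clothing", "SPF-050": "lotion"}


def tag_segments(rows, sku_to_segment, default="other"):
    """Return copies of rows (dicts with a 'sku' key) with a 'segment_id' column added."""
    return [{**row, "segment_id": sku_to_segment.get(row["sku"], default)} for row in rows]


def series_per_segment(tagged_rows):
    """Map each segment_id to the sorted list of SKUs (series) modeled together in it."""
    members = {}
    for row in tagged_rows:
        members.setdefault(row["segment_id"], set()).add(row["sku"])
    return {seg: sorted(skus) for seg, skus in members.items()}


if __name__ == "__main__":
    rows = [
        {"sku": "SWIM-001", "date": "2022-06-01", "units": 40},
        {"sku": "SPF-050", "date": "2022-06-01", "units": 55},
        {"sku": "TEE-002", "date": "2022-06-01", "units": 12},
        {"sku": "HAT-900", "date": "2022-06-01", "units": 3},
    ]
    print(series_per_segment(tag_segments(rows, SKU_TO_SEGMENT)))
```

Unmapped SKUs fall into an "other" segment here rather than raising an error; whether that default is appropriate is a modeling decision.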

But sometimes you don't want to rely on human intuition to define segments. Maybe it's time-consuming. Maybe you don't have a good idea of how segments should be defined. This is where clustering comes in.

Clustering, or defining groups of similar items, is a frequently used tool in a data scientist's toolkit. Adding a time component makes clustering significantly harder. Clustering time series requires you to group entire series of data, not individual observations, so the way we define distance and measure "similarity" between members of a cluster gets more complicated.
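One common way to measure similarity between whole series is to z-normalize each series (subtract its mean, divide by its standard deviation) and then take the Euclidean distance, so that two products with the same seasonal shape but very different sales volumes count as similar. This sketch uses that choice for illustration; it is an assumption, not a statement of the distance DataRobot uses, and alternatives such as dynamic time warping exist. The sales figures are made up.

```python
import math
from statistics import mean, pstdev


def z_normalize(series):
    """Rescale a series to mean 0 and standard deviation 1 so only its shape remains."""
    mu, sigma = mean(series), pstdev(series)
    if sigma == 0:
        return [0.0] * len(series)  # a flat series carries no shape information
    return [(x - mu) / sigma for x in series]


def shape_distance(a, b):
    """Euclidean distance between z-normalized, equal-length series."""
    return math.dist(z_normalize(a), z_normalize(b))


if __name__ == "__main__":
    # Hypothetical weekly sales across six weeks spanning early to late summer.
    swimsuits = [10, 20, 80, 90, 30, 10]
    sunscreen = [100, 200, 800, 900, 300, 100]  # same shape, 10x the volume
    umbrellas = [80, 70, 20, 10, 60, 90]        # roughly the opposite pattern
    print("raw:  ", math.dist(swimsuits, sunscreen), math.dist(swimsuits, umbrellas))
    print("shape:", shape_distance(swimsuits, sunscreen), shape_distance(swimsuits, umbrellas))
```

On raw values, swimsuits look closer to umbrellas than to sunscreen simply because of scale; after normalization the ranking flips, which is usually what a demand-pattern clustering wants.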

The DataRobot platform offers the unique ability to cluster time series into groups. As a user, you can pass in your data with multiple series, specify how many clusters you want, and the DataRobot platform will apply time series clustering techniques to generate the clusters for you.
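To make the idea concrete, the sketch below clusters equal-length series by shape with plain k-means on z-normalized values, using a deterministic farthest-first initialization. It is a generic technique chosen for illustration, not DataRobot's clustering method, and the four product series are hypothetical.

```python
import math
from statistics import mean, pstdev


def z_normalize(series):
    """Rescale to mean 0 and standard deviation 1 (flat series map to all zeros)."""
    mu, sigma = mean(series), pstdev(series)
    return [0.0] * len(series) if sigma == 0 else [(x - mu) / sigma for x in series]


def kmeans_series(series_by_id, k, iters=50):
    """Cluster equal-length series by shape; returns {series_id: cluster_index}."""
    ids = sorted(series_by_id)
    points = {i: z_normalize(series_by_id[i]) for i in ids}
    # Farthest-first init: start from the first id, then repeatedly add the point
    # farthest from all centroids chosen so far.
    centroids = [points[ids[0]]]
    while len(centroids) < k:
        far = max(ids, key=lambda i: min(math.dist(points[i], c) for c in centroids))
        centroids.append(points[far])
    labels = {}
    for _ in range(iters):
        # Assignment step: each series joins its nearest centroid.
        new_labels = {
            i: min(range(k), key=lambda c: math.dist(points[i], centroids[c])) for i in ids
        }
        if new_labels == labels:
            break  # converged
        labels = new_labels
        # Update step: each centroid becomes the pointwise mean of its members.
        for c in range(k):
            members = [points[i] for i in ids if labels[i] == c]
            if members:  # keep the old centroid if a cluster empties out
                centroids[c] = [mean(col) for col in zip(*members)]
    return labels


if __name__ == "__main__":
    data = {
        "swimsuits": [5, 10, 60, 90, 40, 8],
        "sunscreen": [50, 90, 500, 800, 300, 70],
        "coats": [90, 60, 10, 5, 30, 80],
        "scarves": [300, 200, 40, 20, 100, 280],
    }
    print(kmeans_series(data, k=2))
```

With these inputs, the two summer products share one cluster and the two winter products share the other, which mirrors the bathing suit and sunscreen example in the next paragraph.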

For example, suppose you have 50,000 SKUs. The demand for some SKUs follows similar patterns. Bathing suits and sunscreen, for instance, are probably bought a lot during warmer seasons and less frequently in colder or wetter seasons. If humans are defining segments, an analyst might put bathing suits into a "clothing" segment and sunscreen into a "lotion" segment. When the DataRobot platform automatically clusters similar SKUs, it can pick up on these similarities and place bathing suits and sunscreen into the same cluster. With the DataRobot platform, clustering happens at scale: grouping 50,000 SKUs into clusters is no problem.

Clustering time series in and of itself generates a lot of value for organizations. Understanding which SKUs have similar buying patterns, for example, can help your marketing team decide which types of products should be marketed together.

Within the DataRobot platform, there's an additional benefit to clustering time series: these clusters can be used to define segments for segmented modeling. This means DataRobot AI gives you the ability to build segmented models based on either cluster-defined segments or human-defined segments.

As experienced data scientists, we understand that modeling is only part of our work. If we can't communicate insights to others, our models aren't as useful as they could be. It's also important to be able to trust the model. We want to avoid "black box AI," where it's unclear why certain decisions were made. If we're building forecasts that might affect certain groups of people, we as data scientists need to know the limitations and potential biases of our models.

The DataRobot platform understands this need and, as a result, has embedded explainability throughout the platform. For your forecasting models, you can understand how your model is performing at a global level, how it performs for specific time periods of interest, which features are most important to the model as a whole, and even which features are most important to individual predictions.

In conversations with business stakeholders or the C-suite, it's helpful to have quick summaries of model performance, like accuracy, R-squared, or mean squared error. In time series modeling, though, it's critical to understand how that performance changes over time. If your model is 99% accurate but repeatedly gets your biggest sales cycles wrong, it might not actually be a good model for your business purposes.
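A small numeric illustration of why a single summary can mislead: the sketch computes mean absolute percentage error (MAPE) over all periods and again over only the peak periods. MAPE is our choice of metric here, and the twelve monthly values are hypothetical.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent. Assumes no actual value is zero."""
    return 100 * sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


def peak_mape(actual, predicted, top_n):
    """MAPE restricted to the top_n periods with the highest actual values."""
    idx = sorted(range(len(actual)), key=lambda i: actual[i], reverse=True)[:top_n]
    return mape([actual[i] for i in idx], [predicted[i] for i in idx])


if __name__ == "__main__":
    # Hypothetical monthly sales: flat all year, with a large December peak.
    actual = [100.0] * 11 + [300.0]
    predicted = [100.0] * 11 + [200.0]  # perfect except for the peak month
    print(f"overall MAPE: {mape(actual, predicted):.2f}%")
    print(f"peak-month MAPE: {peak_mape(actual, predicted, top_n=1):.2f}%")
```

Here the overall MAPE is under 3%, yet the error in the peak month is about 33%, which is the kind of gap a time-aware view of performance is meant to expose.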

The DataRobot Accuracy Over Time chart shows a clear picture of how a model's performance changes over time. You can easily spot "big misses" where predictions don't line up with the actual values. You can also tie these misses back to calendar events. In a retail context, holidays are often important drivers of sales behavior, so we can check whether the gaps tend to align with holidays. If so, that tells us something about how to improve our models (for example, through feature engineering) and about when our models are most reliable. The DataRobot platform can automatically engineer features based on holidays and other calendar events.
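The same check can be scripted outside the chart: list the dates whose absolute error exceeds a threshold and mark which ones fall on holidays, then derive simple calendar features for retraining. This is a sketch under assumptions; the holiday set, the threshold, and the feature names are hypothetical and much simpler than DataRobot's calendar-based features.

```python
from datetime import date, timedelta

# Hypothetical holiday calendar, for illustration only.
HOLIDAYS = {date(2022, 11, 25), date(2022, 12, 24)}


def big_misses(dates, actual, predicted, threshold, holidays=HOLIDAYS):
    """Return (date, actual - predicted, is_holiday) for every absolute error above threshold."""
    return [
        (d, a - p, d in holidays)
        for d, a, p in zip(dates, actual, predicted)
        if abs(a - p) > threshold
    ]


def holiday_features(d, holidays=HOLIDAYS, lead_days=3):
    """Two simple calendar features: the day is a holiday, or one falls within lead_days ahead."""
    return {
        "is_holiday": d in holidays,
        "holiday_ahead": any(d < h <= d + timedelta(days=lead_days) for h in holidays),
    }


if __name__ == "__main__":
    days = [date(2022, 11, 23) + timedelta(days=i) for i in range(4)]
    print(big_misses(days, [100, 100, 250, 120], [98, 103, 150, 118], threshold=20))
    print(holiday_features(days[0]))
```

If most big misses come back flagged as holidays, features like these are a natural candidate for the next modeling iteration.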

To go deeper, you might ask, "Which inputs have the biggest impact on our model's predictions?" The DataRobot Feature Impact tab shows exactly that, ranking each input feature by how much it contributed to predictions globally. Recall that DataRobot automates the feature engineering process for you. When examining the effect of various features, you can see both the original features (i.e., before feature engineering) and the derived features that DataRobot created. These insights give you more clarity on model behavior and on what drives the outcome you're trying to forecast.
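Global rankings like this are often computed with permutation importance: shuffle one input column, re-score the model, and record how much the error grows. The sketch below is a generic, standard-library version of that technique, included for intuition; it is not DataRobot's Feature Impact implementation, and the toy model and data are hypothetical.

```python
import random


def mse(y, yhat):
    """Mean squared error between two equal-length sequences."""
    return sum((a - b) ** 2 for a, b in zip(y, yhat)) / len(y)


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average increase in MSE after shuffling each feature column.

    X is a list of rows (lists of floats); predict maps one row to a number.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    baseline = mse(y, [predict(row) for row in X])
    scores = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            col = [row[j] for row in X]
            rng.shuffle(col)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, col)]
            increases.append(mse(y, [predict(r) for r in X_perm]) - baseline)
        scores.append(sum(increases) / n_repeats)
    return scores


if __name__ == "__main__":
    X = [[float(i), float((i * 3) % 8)] for i in range(8)]
    y = [2.0 * row[0] for row in X]
    print(permutation_importance(lambda r: 2.0 * r[0], X, y))
```

The toy model uses only the first feature, so shuffling the second column leaves the error unchanged and its importance comes out as exactly zero.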

You can go even deeper. For each prediction, you can quantify the impact of features on that individual prediction using DataRobot Prediction Explanations. Rather than seeing an outlier that calls your model into question, you can explore unexpectedly high and low values to understand why a prediction is what it is. In this example, the model has estimated that a given store will have about $46,000 in sales on a given day. The Prediction Explanations tab shows that the main features influencing this prediction are:

You can see that this particular sales value for this particular store was pushed upward by all of the variables except Day of Week, which pushed the prediction downward. Doing this kind of investigation manually takes a lot of time; Prediction Explanations dramatically speed it up. DataRobot Prediction Explanations are driven by the proprietary DataRobot XEMP (eXemplar-based Explanations of Model Predictions) methodology.
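XEMP itself is proprietary, so it is not reproduced here. For intuition about what a per-prediction explanation expresses, the sketch below uses a much simpler, different technique: for a linear model, each feature's contribution is its coefficient times the feature's difference from a reference row, and the contributions add up exactly to the gap between the two predictions. The feature names, coefficients, and values are all hypothetical.

```python
def linear_contributions(coefs, intercept, x, reference):
    """Explain a linear model's prediction for row x relative to a reference row.

    Returns (prediction, reference_prediction, {feature: contribution}); the
    contributions sum to prediction - reference_prediction.
    """
    contribs = {name: coefs[name] * (x[name] - reference[name]) for name in coefs}
    pred = intercept + sum(coefs[n] * x[n] for n in coefs)
    ref_pred = intercept + sum(coefs[n] * reference[n] for n in coefs)
    return pred, ref_pred, contribs


if __name__ == "__main__":
    # Hypothetical model of daily sales in $K.
    coefs = {"sales_lag_7": 0.9, "promotion": 3.0, "is_sunday": -2.5}
    x = {"sales_lag_7": 40.0, "promotion": 1.0, "is_sunday": 1.0}
    typical_day = {"sales_lag_7": 35.0, "promotion": 0.0, "is_sunday": 0.0}
    pred, ref_pred, contribs = linear_contributions(coefs, 5.0, x, typical_day)
    print(f"prediction {pred:.1f} vs reference {ref_pred:.1f}")
    for name, value in sorted(contribs.items(), key=lambda kv: -abs(kv[1])):
        print(f"  {name}: {value:+.1f}")
```

In this toy output the lag and promotion features push the prediction up while the day-of-week flag pushes it down, the same shape of story the Prediction Explanations tab tells, though computed in a very different way.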

This only scratches the surface of the explainability charts and tools available.

You can start by pulling data from Google BigQuery and taking advantage of the immense scale of data that BigQuery can handle. This includes both data you've put into BigQuery and Google BigQuery public datasets you want to use, like weather data or Google Search Trends data. Then you can build forecasting models in the DataRobot platform on these large datasets and make sure you're confident in the performance and predictions of your models.

When it's time to put these models into production, the DataRobot platform APIs let you generate model predictions and export them directly back into BigQuery. From there, you can use your predictions in BigQuery however you see fit, such as displaying your forecasts in a Looker dashboard.
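Once predictions are in hand, writing them back can be done with the BigQuery client's streaming-insert call. This is a minimal sketch, assuming the `google-cloud-bigquery` Python client, an existing destination table whose schema matches the hypothetical columns below, and predictions already retrieved from DataRobot as `(store_id, forecast_date, predicted_sales)` tuples; retrieving them from DataRobot is not shown.

```python
from datetime import date


def to_bigquery_rows(predictions, model_id):
    """predictions: iterable of (store_id, forecast_date, predicted_sales).

    Returns JSON-serializable dicts; dates become ISO strings for DATE columns.
    Column names are hypothetical and must match the destination table schema.
    """
    return [
        {
            "store_id": store,
            "forecast_date": d.isoformat(),
            "predicted_sales": float(value),
            "model_id": model_id,
        }
        for store, d, value in predictions
    ]


def write_rows(table_id, rows):
    """Stream rows into an existing table; needs GCP credentials."""
    from google.cloud import bigquery  # imported lazily so row building needs no GCP setup

    client = bigquery.Client()
    errors = client.insert_rows_json(table_id, rows)  # returns a list of per-row errors
    if errors:
        raise RuntimeError(f"BigQuery insert failed: {errors}")


if __name__ == "__main__":
    rows = to_bigquery_rows([("columbus-01", date(2022, 12, 1), 41250)], "hypothetical-model-id")
    print(rows)
    # write_rows("my-project.retail.sales_forecasts", rows)  # hypothetical table id
```

For large batch outputs, a load job may be a better fit than streaming inserts; the row-building step stays the same either way.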

To use DataRobot and Google BigQuery together, start by setting up the connection between BigQuery and DataRobot.

About the author

Principal Data Scientist, Technical Excellence & Product at DataRobot

Matt Brems is Principal Data Scientist, Technical Excellence & Product with DataRobot and is Co-Founder and Managing Partner at BetaVector, a data science consultancy. His full-time professional data work spans computer vision, finance, education, consumer-packaged goods, and politics. Matt earned General Assembly's first "Distinguished Faculty Member of the Year" award out of over 20,000 instructors. He earned his Master's degree in statistics from Ohio State. Matt is passionate about mentoring folx in data and tech careers, and he volunteers as a mentor with Coding It Forward and the Washington Statistical Society. Matt also volunteers with Statistics Without Borders, currently serving on their Executive Committee and leading the organization as Chair.
